As of the November 2019 rankings, Cannon ranks #144 on the Top500 list!

For current cluster details, please see: https://www.rc.fas.harvard.edu/about/cluster-architecture/

For current partition configurations and changes, please see the Running Jobs page.

The lease on the Odyssey compute cluster comes to an end this year, and a hardware refresh of the cluster will occur in September.

Current ETA for switching over is Tuesday, September 24th, 2019 (note date change).

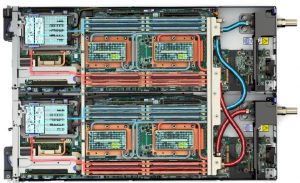

Our new cluster will be provided by Lenovo and utilize their SD650 NeXtScale servers with direct-to-node water-cooling for increased performance, density, ease of expansion, and controlled cooling.

These units contain two nodes per 1U rack space, so this will change the purchasing model for those who wish to have dedicated hardware. Because of the new cooling system, new nodes will only be added at specific times of the year. We will coordinate this with PIs during the purchasing phase for any new hardware.

TIMELINE

CANNON IS LIVE: Sept. 24, 2019

The refreshed cluster, named Cannon in honor of Annie Jump Cannon, comprises 670 compute nodes plus 16 new GPU nodes. This new cluster will have 30,000 cores of Intel 8268 "Cascade Lake" processors. Each node will have 48 cores and 192 GB of RAM. The interconnect is HDR 100 Gbps InfiniBand (IB) connected in a single Fat Tree with a 200 Gbps IB core. The entire system is water cooled, which will allow us to run these processors at a much higher clock rate of ~3.4 GHz. In addition to the general-purpose compute resources, we are also installing 16 SR670 servers, each with four Nvidia V100 GPUs and 384 GB of RAM, all connected by HDR IB.
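As a rough sanity check, the per-node figures quoted above can be multiplied out into cluster-wide totals. The sketch below assumes that all 670 compute nodes match the quoted 48-core, 192 GB configuration; the constant and function names are illustrative only. Under that assumption the CPU core count comes out slightly above the rounded 30,000 figure.

```python
# Figures taken from the announcement text; names are illustrative.
COMPUTE_NODES = 670
CORES_PER_NODE = 48
RAM_GB_PER_NODE = 192
GPU_NODES = 16
GPUS_PER_GPU_NODE = 4
RAM_GB_PER_GPU_NODE = 384


def cluster_totals():
    """Return aggregate counts, assuming every node matches the quoted per-node spec."""
    return {
        "cpu_cores": COMPUTE_NODES * CORES_PER_NODE,
        "compute_ram_gb": COMPUTE_NODES * RAM_GB_PER_NODE,
        "gpus": GPU_NODES * GPUS_PER_GPU_NODE,
        "gpu_node_ram_gb": GPU_NODES * RAM_GB_PER_GPU_NODE,
    }


totals = cluster_totals()
for name, value in totals.items():
    print(f"{name}: {value:,}")
```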

Please note that fairshare/billing has been recalculated so that the new, faster Cascade Lake CPUs are the baseline. This will further reduce the cost of the slower AMD nodes. The Fairshare doc has been updated with these changes.

UPDATE: Odyssey has been decommissioned.

Once roll-out begins, the Odyssey cluster will be drained of jobs over several days and then decommissioned. The partition names will not change (shared, general, etc.), but the core and memory figures for each will be updated. The associated architectures will also remain, as we will keep a number of AMD nodes in the general partition. The number of AMD nodes will dwindle over time as they are folded into a new academic cluster that is in the works (the general partition will be closed on October 7th, 2019). A new partition called 'gpu_test', comprised of one of the 16 GPU nodes (and its four V100s), will be added to allow very short test jobs (one hour or less).
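To illustrate what a short test job on 'gpu_test' might look like, here is a minimal sketch that renders a batch script while enforcing the one-hour and four-GPU limits described above. It assumes the scheduler is Slurm and that GPUs are requested through the generic resource name `gpu`; the helper `gpu_test_script` is hypothetical and not part of any cluster tooling. Please check the Running Jobs page for the actual settings.

```python
def gpu_test_script(command, minutes=30, gpus=1):
    """Render a Slurm batch script for a short gpu_test job (hypothetical helper)."""
    if not 1 <= minutes <= 60:
        raise ValueError("gpu_test is meant for jobs of one hour or less")
    if not 1 <= gpus <= 4:
        raise ValueError("the gpu_test node has four V100s")
    hours, mins = divmod(minutes, 60)
    lines = [
        "#!/bin/bash",
        "#SBATCH --partition=gpu_test",
        f"#SBATCH --time={hours:02d}:{mins:02d}:00",
        # Assumption: GPUs are exposed as the generic resource "gpu".
        f"#SBATCH --gres=gpu:{gpus}",
        command,
    ]
    return "\n".join(lines) + "\n"


# Example: a ten-minute job that just reports the visible GPUs.
print(gpu_test_script("nvidia-smi", minutes=10))
```

The rendered text could be saved to a file and submitted with `sbatch`.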