DOWNTIME COMPLETE

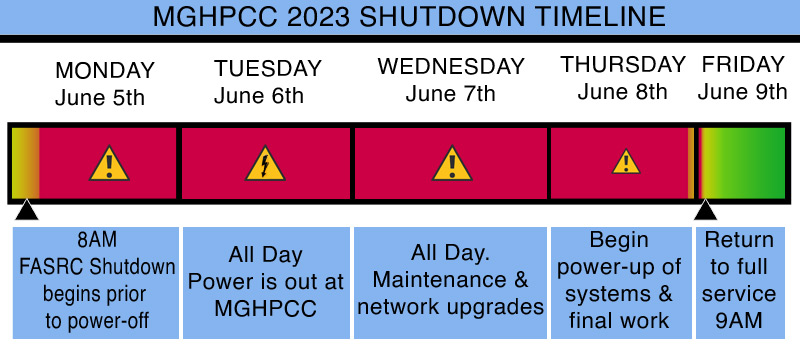

The annual multi-day power downtime at MGHPCC (https://www.rc.fas.harvard.edu/blog/2023-downtime/) is complete (with exceptions noted below). Normal service resumes today (Friday June 9th) at 9am. As noted in previous communications, many changes have been made to the cluster and software. If you run jobs on the cluster and did not previously try out the test cluster, you will need to make adjustments to your workflow.

Exceptions: The gpu_test, fasse_gpu, and remoteviz partitions are not yet available due to unexpected configuration issues. It is our first priority to get them all online again as soon as we can. Other GPU partitions are not affected.

- Any owned nodes that are not available will be cleaned up after we address the above partitions.

- Nodes with GPUs older than V100 may also be down due to being dropped from support by current Nvidia drivers. We will need to address these on a case-by-case basis.

CHANGES

Operating System

The cluster and its login nodes are now on Rocky Linux 8. While this is a CentOS derivative, there are some differences from CentOS 7 that could impact your jobs. Please see our Rocky 8 Transition Guide for more information: https://docs.rc.fas.harvard.edu/kb/rocky-8-transition-guide/

Software

Much has changed with the software offerings on the FASRC cluster. These changes bring us more in line with how peer institutions handle software and reduce the sheer volume and complexity of modules and versions that FASRC was having to maintain. There are now fewer modules, but more resources and tools for building and installing your own software. Please see: https://docs.rc.fas.harvard.edu/kb/rocky-8-transition-guide/ and https://docs.rc.fas.harvard.edu/kb/software/

Public Partition Time Limits on Cannon

After an analysis (https://www.rc.fas.harvard.edu/blog/cluster-fragmentation/) of job run times, which found that over 95% of jobs complete within 3 days, we will be changing all the public partitions on Cannon, excluding unrestricted, to a 3 day time limit. To accommodate the 5% of jobs that run longer than 3 days, we will be adding an "intermediate" partition for jobs that need to run between 3 and 14 days, with "unrestricted" handling the rest. For new partitions owned by specific groups, we will also be instituting a default 3 day time limit. Existing partitions are not impacted, and groups may change this default to suit their needs.
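As a rough illustration of the new limits, the following sketch picks a partition from a job's expected run time. The 3 day and 14 day thresholds and the "intermediate" and "unrestricted" names come from the text above. The default public partition name ("shared") is only a placeholder, and the day-hours:minutes:seconds time string assumes a Slurm-style scheduler request. Check the transition guide for the actual partition list.

```python
from datetime import timedelta

# Limits taken from the announcement: 3 days on public partitions,
# 3 to 14 days on "intermediate", anything longer on "unrestricted".
PUBLIC_LIMIT = timedelta(days=3)
INTERMEDIATE_LIMIT = timedelta(days=14)


def choose_partition(expected_runtime: timedelta,
                     public_partition: str = "shared") -> str:
    """Return a partition name suited to the expected run time.

    public_partition is a placeholder; substitute the public partition
    you normally submit to.
    """
    if expected_runtime <= timedelta(0):
        raise ValueError("expected run time must be positive")
    if expected_runtime <= PUBLIC_LIMIT:
        return public_partition
    if expected_runtime <= INTERMEDIATE_LIMIT:
        return "intermediate"
    return "unrestricted"


def slurm_time(limit: timedelta) -> str:
    """Format a timedelta as a D-HH:MM:SS time string (Slurm style, assumed)."""
    total = int(limit.total_seconds())
    days, rest = divmod(total, 86400)
    hours, rest = divmod(rest, 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{days}-{hours:02d}:{minutes:02d}:{seconds:02d}"


if __name__ == "__main__":
    runtime = timedelta(days=5)
    print(choose_partition(runtime), slurm_time(runtime))
```

A job expected to take 5 days would land on "intermediate" under these rules. Requesting a realistic run time rather than the partition maximum generally helps the scheduler place your job sooner.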

TRAINING/SUPPORT

You will find new training opportunities as well as new user sessions at: https://www.rc.fas.harvard.edu/upcoming-training/

Office hours resume Wednesday June 14th. Three additional special office hours sessions have been added on 6/15, 6/22, and 6/29.

Please see: https://www.rc.fas.harvard.edu/training/office-hours/

IMPORTANT NOTES BEFORE LOGGING IN

Module Loads/.bashrc

If you load modules in your .bashrc or other startup script, you will likely need to modify this as some modules will no longer be available or will have changed.

You can also remove "source new-modules.sh" from your .bashrc if it is still there.

You _should not_ be loading the old centos6 module as it is fully deprecated.
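If you are unsure what your startup file currently does, a small script can list the lines worth reviewing. This is a minimal sketch, not an official FASRC tool. It only looks for the three cases named above (any "module load" line, the centos6 module, and "source new-modules.sh"), and the function names are made up for illustration.

```python
import re
from pathlib import Path
from typing import Optional

# Patterns for the cases named in the announcement, most specific first.
CHECKS = [
    (re.compile(r"^\s*(?:source|\.)\s+\S*new-modules\.sh\b"),
     "new-modules.sh is no longer needed and can be removed"),
    (re.compile(r"^\s*module\s+load\b.*\bcentos6\b"),
     "the centos6 module is deprecated and should not be loaded"),
    (re.compile(r"^\s*module\s+load\b"),
     "check that this module still exists under Rocky 8"),
]


def audit_startup_text(text: str) -> list:
    """Return (line number, line, reason) tuples for lines worth reviewing."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        if line.lstrip().startswith("#"):
            continue  # commented-out lines are harmless
        for pattern, reason in CHECKS:
            if pattern.search(line):
                findings.append((number, line.strip(), reason))
                break  # report only the most specific reason
    return findings


def audit_startup_file(path: Optional[Path] = None) -> list:
    """Audit a startup file, defaulting to ~/.bashrc; a missing file yields []."""
    path = Path.home() / ".bashrc" if path is None else path
    return audit_startup_text(path.read_text()) if path.exists() else []


if __name__ == "__main__":
    for number, line, reason in audit_startup_file():
        print(f"line {number}: {line}\n    -> {reason}")
```

The script only reports; it does not edit anything. Modules loaded from other startup scripts (for example ~/.bash_profile) can be checked by passing that path to audit_startup_file.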

SSH Error

When logging in via SSH on or after June 9th, you will likely see this error:

@ WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED @

This is because new login nodes have been deployed with the same names as the old ones, so the host keys stored on your computer no longer match.

See these instructions for removing the old key fingerprint(s): https://docs.rc.fas.harvard.edu/kb/ssh-key-error/
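The linked instructions are the authoritative procedure. On most systems the standard OpenSSH command "ssh-keygen -R <hostname>" removes the stale entry for you. For those curious about what that cleanup amounts to, here is a minimal sketch that filters plain (unhashed) entries for one host out of known_hosts content. The hostname login.example.edu is a stand-in, not a real FASRC host name, and hashed entries (lines starting with "|1|") are deliberately left untouched.

```python
def drop_host_entries(known_hosts_text: str, hostname: str) -> str:
    """Return known_hosts content without unhashed entries for hostname."""
    kept = []
    for line in known_hosts_text.splitlines():
        fields = line.split()
        # Keep blank lines and comments unchanged.
        if not fields or fields[0].startswith("#"):
            kept.append(line)
            continue
        # Entries may begin with a marker such as @cert-authority or
        # @revoked, in which case the host list is the second field.
        if fields[0].startswith("@") and len(fields) > 1:
            host_field = fields[1]
        else:
            host_field = fields[0]
        # The host field is a comma-separated list of names and addresses.
        if hostname in host_field.split(","):
            continue  # drop the stale entry
        kept.append(line)
    return "\n".join(kept) + ("\n" if kept else "")


if __name__ == "__main__":
    example = (
        "login.example.edu,10.0.0.1 ssh-ed25519 AAAAexamplekey1\n"
        "other.example.edu ssh-rsa AAAAexamplekey2\n"
    )
    print(drop_host_entries(example, "login.example.edu"), end="")
```

If you adapt this to your real ~/.ssh/known_hosts, make a backup copy of the file first. The next SSH login will then prompt you to accept the new host key.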

Conda/Anaconda Python Environments

Due to the OS and other changes under the hood, Conda/Anaconda Python environments will need to be rebuilt. Running environments built under CentOS 7 may produce unexpected results or fail.

Please note that Conda has been replaced with Mamba, a faster replacement that is fully compatible with Conda commands.

See: https://docs.rc.fas.harvard.edu/kb/python/ and https://docs.rc.fas.harvard.edu/kb/rocky-8-transition-guide/

Login Node Processes - Core and Memory Restrictions

We have moved away from the old pkill process on login nodes, which killed processes using too much CPU or memory (RAM) in order to maintain a fair balance for all. The Rocky 8 login nodes instead use a system-level mechanism called cgroups, which limits each logged-in account to 1 core and 4GB of memory.

Should you run a process on a login node that runs afoul of cgroup policies, the process will be killed. Please be aware that there is no warning mechanism. Please err on the side of caution when choosing to run something on a login node versus an interactive session.

See: https://docs.rc.fas.harvard.edu/kb/rocky-8-transition-guide/ and https://docs.rc.fas.harvard.edu/kb/responsibilities/
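One way to judge whether a task belongs on a login node is to compare its needs against the limits above before running it. The sketch below is illustrative only. The 1 core and 4GB figures come from this announcement, the function names are hypothetical, and the peak-memory helper relies on Python's standard resource module, which on Linux reports ru_maxrss in kibibytes. It measures the current Python process only, so use it while testing inside an interactive session, not as a guard on the login node itself.

```python
import resource

# Per-account login node limits stated in the announcement.
LOGIN_NODE_CORES = 1
LOGIN_NODE_MEMORY_GB = 4


def fits_on_login_node(cores: int, memory_gb: float) -> bool:
    """Return True if a task's needs stay within the login node limits."""
    return cores <= LOGIN_NODE_CORES and memory_gb <= LOGIN_NODE_MEMORY_GB


def peak_memory_gib() -> float:
    """Peak resident memory of this Python process so far, in GiB.

    On Linux, ru_maxrss is reported in kibibytes.
    """
    kib = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    return kib / 1024 ** 2


if __name__ == "__main__":
    # Example: a 2-core, 6 GB task should go to an interactive session.
    print(fits_on_login_node(cores=2, memory_gb=6))
    print(f"peak memory so far: {peak_memory_gib():.3f} GiB")
```

Because the cgroup limit applies to the whole account, several small processes running at once count together. When in doubt, use an interactive session.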

OOD/OpenOnDemand/VDI

Regarding OOD/VDI: The Open OnDemand instances for Cannon (not FASSE) will involve a change to each user's settings and the location of the folder containing those settings and other OOD-specific files. This also means that values you had filled out in the OOD start form will not be retained. Form values will revert to the defaults.

The new location in your Cannon home directory will be ~/.fasrcood

The old folder at ~/fasrc will no longer be used and can be removed.

The location for FASSE settings will not change and will remain at ~/.fasseood

POST-UPGRADE SUPPORT

If you have any further concerns or unanswered questions, please visit office hours (https://www.rc.fas.harvard.edu/training/office-hours/) or submit a help ticket (https://portal.rc.fas.harvard.edu/rcrt/submit_ticket) and we will do our best to help. If your concern is software-related, please also consider attending one of the software trainings: https://www.rc.fas.harvard.edu/upcoming-training/

Thanks,

FAS Research Computing

https://www.rc.fas.harvard.edu/

https://docs.rc.fas.harvard.edu/

https://status.rc.fas.harvard.edu/

rchelp@rc.fas.harvard.edu

Original Timeline and Information

The annual multi-day power downtime at MGHPCC is ongoing: June 5th-8th, with a return to full service at 9AM Friday June 9th.

During this downtime we will be performing major updates to the cluster's operating system, public partition time limits, and significant changes to our software offerings and procedures. This will affect ALL cluster users and as described below may require you to modify your normal workflows and software chains. Test clusters for Cannon and FASSE (including OOD/VDI) are available. Due to the breadth of these changes, this downtime will also impact almost every service we provide, including most housed in Boston.

- All running and all pending jobs will be purged Monday morning June 5th. All pending jobs or unfinished jobs will need to be re-submitted once the cluster is back up.

- All login nodes will be down for the duration and will have their OS updated to Rocky 8. Once complete, the login process will not change, but the underlying operating system will. When logging in via SSH on or after June 9th, you will likely see "WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED". This is because new login nodes will have been deployed but with the same names. See these instructions for removing the old key fingerprint: https://docs.rc.fas.harvard.edu/kb/ssh-key-error/

- All storage at MGHPCC/Holyoke will be powered down during the outage. Boston storage servers will not be powered down but will likely be unavailable as there will be no nodes available to access them. Some Boston Samba shares may be accessible, but please assume they will also be affected at various times.

OS Update - Rocky Linux

Currently the cluster nodes (Cannon and FASSE), and indeed most of our infrastructure, are built on CentOS 7, the non-commercial version of RedHat's Enterprise Linux. CentOS is being discontinued by RedHat and new development ceased at the end of 2021. As a result, we are moving to Rocky 8 Linux, created by the same people who began CentOS and which much of the HPC community is also getting behind. Given the wide adoption indicated by other HPC sites, we feel confident Rocky Linux is the right choice so that we will be part of a large community where we can both find common support and contribute back.

Rocky Linux will be a major update with significant changes in the same way that our move from CentOS 6 to CentOS 7 was. As such, there will be issues that will affect some pipelines, software, or codes. Additionally, we will necessarily be revamping our software offerings and, concurrently, also giving end-users more control over their own software with new build tools such as Spack.

Rocky 8 Transition Guide: https://docs.rc.fas.harvard.edu/kb/rocky-8-transition-guide/

Please DO expect to see SSH warnings when you log in again after June 8th as all machines including login nodes will have been completely rebuilt. See the FAQ at: https://docs.rc.fas.harvard.edu/kb/rocky-8-transition-guide/#FAQ

Cannon and FASSE OOD (aka VDI)

Regarding OOD/VDI: The Open OnDemand instances for Cannon (not FASSE) will involve a change to each user's settings and the location of the folder containing those settings and other OOD-specific files. This also means that values you had filled out in the OOD start form will not be retained. Form values will revert to the defaults.

The new location in your Cannon home directory will be ~/.fasrcood

Any "development mode" AKA "sandbox" apps that you have installed to ~/fasrc/dev (approximately 78 of you have created these) will no longer be visible through your OOD Dashboard, and will need to be moved to ~/.fasrcood/dev. The old folder at ~/fasrc will no longer be used and can be removed after June 8th.

The location for FASSE settings in your home directory will not change and will remain at ~/.fasseood
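For those with sandbox apps, the move described above can be sketched as follows. This is a hedged example, not an official migration tool. The two paths come from the text above, the function name is made up, and the choice to skip apps that already exist in the new location is an assumption made to stay on the safe side.

```python
import shutil
from pathlib import Path
from typing import Optional


def migrate_ood_dev_apps(home: Optional[Path] = None) -> list:
    """Move sandbox apps from ~/fasrc/dev to ~/.fasrcood/dev.

    Returns the names of the apps that were moved. Apps already present
    in the new location are skipped and never overwritten.
    """
    home = Path.home() if home is None else home
    old_dev = home / "fasrc" / "dev"
    new_dev = home / ".fasrcood" / "dev"
    moved = []
    if not old_dev.is_dir():
        return moved  # nothing to migrate
    new_dev.mkdir(parents=True, exist_ok=True)
    for app in sorted(old_dev.iterdir()):
        target = new_dev / app.name
        if target.exists():
            continue  # leave conflicts for manual review
        shutil.move(str(app), str(target))
        moved.append(app.name)
    return moved


if __name__ == "__main__":
    for name in migrate_ood_dev_apps():
        print(f"moved {name}")
```

The old ~/fasrc folder is left in place so you can confirm everything arrived before removing it yourself.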

Public Partition Time Limits on Cannon

During the summer of 2022 we did an analysis of job run times on the Cannon cluster with the goal of reassessing our existing partition time limits (which are 7 days). A reduced time limit has many benefits, such as reduced cluster fragmentation, lower wait times, and shorter times to drain nodes for service. As a result of this analysis we found that over 95% of jobs complete within 3 days on all the public partitions excluding unrestricted.

We will be changing all the public partitions on Cannon, excluding unrestricted, to a 3 day time limit. To accommodate the 5% of jobs that run longer than 3 days, we will be adding an intermediate partition for jobs that need to run between 3 and 14 days, with unrestricted handling the rest. For new partitions owned by specific groups, we will also be instituting a default 3 day time limit. Existing partitions are not impacted, and groups may change this default to suit their needs.

Software Changes

FASRC will reduce the number of precompiled software packages that it hosts, distilling this down to the necessities of compilers, any commercial packages, apps needed for VDI, etc.

We will reduce our dependence on the Lmod modules and provide end-users with more and expanded options for building and deploying the software they need. We will incorporate the use of Spack to give users more power to build the software and versions they need. Those who came to FASRC from other HPC sites may recognize this as the norm at many sites these days.

There will be a Singularity image to allow those who need it to run a CentOS 7 image until they can transition away from it: https://docs.rc.fas.harvard.edu/kb/centos7-singularity/

If you load modules in your .bashrc or other startup script, you will likely need to modify this as some modules will no longer be available or will have changed. This is why trying out the test cluster ahead of time is important. Software you have compiled may need to be re-compiled, as an example.

FASRC has a Rocky Linux test environment available where groups can begin to test their software, codes, jobs, etc. Please see the Rocky 8 Transition Guide for details.

Regarding OOD/VDI: The Open OnDemand instances for Cannon will involve a change to each user's settings and the location of the folder containing those settings and other OOD-specific files. This also means that values you had filled out in the OOD start form will not be retained. Form values will revert to the defaults.

The new location in your Cannon home directory will be ~/.fasrcood (This does not affect FASSE OOD users, who will continue to use ~/.fasseood)

Training, Office Hours, and Consultation

Starting in April, in addition to our regular New User training and other upcoming classes, FASRC will be offering training sessions on:

- From CentOS7 to Rocky 8: How the new operating system will affect FASRC clusters. Links to this town hall meeting and its slides can be found at https://docs.rc.fas.harvard.edu/kb/rocky-8-transition-guide/

- Installing and using software on the FASRC cluster (class dates TBD)

- Special office hours will be held June 15th, 22nd, and 29th: https://www.rc.fas.harvard.edu/training/office-hours/

Additionally, we will be providing opportunities for labs and other groups to meet with us to discuss your workflows vis-a-vis the upgrade and changes. Please contact FASRC if your lab would like to schedule a consult.

FASRC has a Rocky Linux 8 test environment available where groups can begin to test their software, codes, jobs, etc. This includes Cannon and FASSE users and OOD/VDI. Please see the Rocky 8 Transition Guide for details.