| 2020 - A year full of changes |

2020 began somewhat normally, except that the world was coming to grips with a global pandemic. By February, plans were being formed for a probable move to 100% remote work for FASRC staff. This became a reality in March, and we bid our offices in '38' farewell for an indeterminate time. The change drastically altered all on-campus work and, because of state and university policies, severely limited our data center access. That would soon be a problem, since a data center consolidation project was expected to start in the summer. Now we had to, as the saying goes, 'wing it' as soon as a window opened.

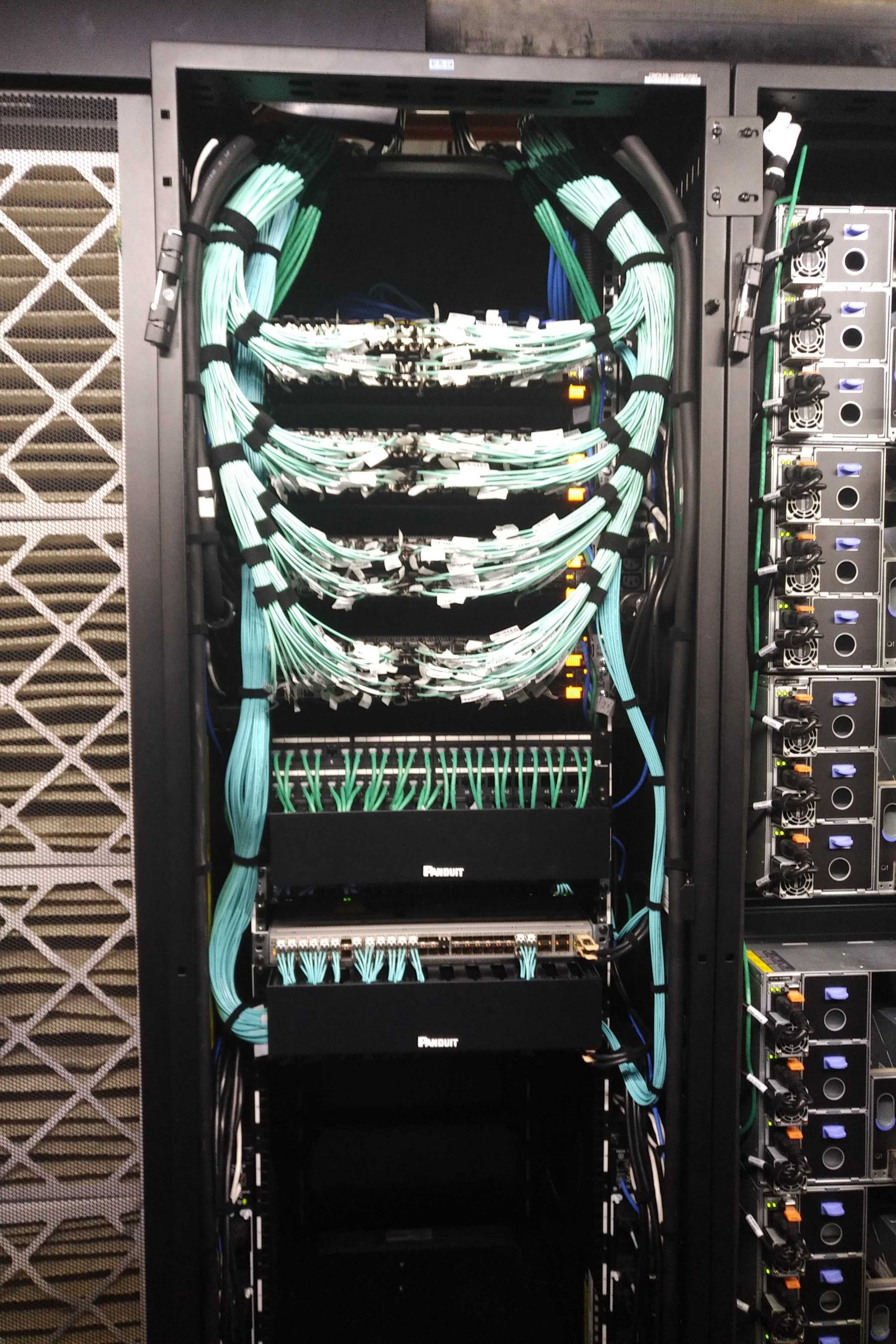

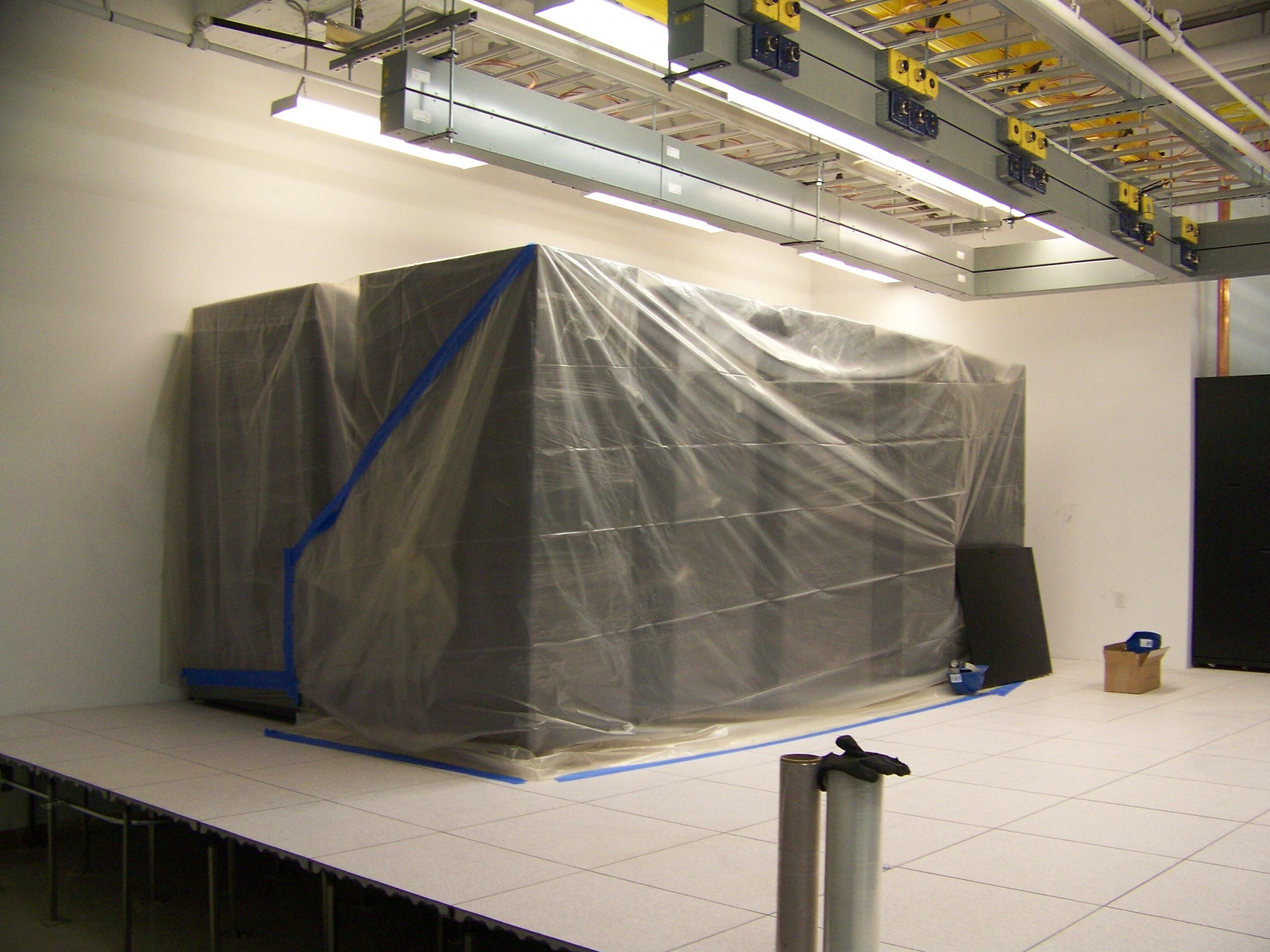

The plan was to consolidate our floor in the Boston data center with HMS's floor, putting both of us in one space. This would save the university approximately $1.2M a year. It would also give us an opportunity to decommission some older gear, mostly older storage servers whose occupants could be moved to newer Lustre or Ceph storage. A lengthy summer/fall move followed by a post-summer clean-up was no longer possible, and the crux of the matter was a looming deadline: we had to be out by December. In the end, the whole project was compressed into roughly September through November. Thanks to herculean efforts by the whole team - especially those doing share migrations and those doing the physical un-racking, de-cabling, carting, moving, and recycling - we managed to pull it off.

More than 100 lab shares were migrated to newer storage systems as quickly as was reasonable (and thank you all for your cooperation and understanding!) and approximately 18 racks full of gear were decommissioned. We moved thousands of pounds of servers and racks to the new space and/or storage, and sent 7 pallets, comprising literal tons of out-dated servers, to our e-waste recycler as of Nov. 25th. That includes 863 drives, 19 network devices, 6 racks, 128 servers, and 57 storage shelves.

By the end of November... we were out. The space was clean and each row contained nothing but the power panels and in-row coolers. In hindsight it still seems impossible, but thanks to the planning and professionalism of our staff, nearly nine months of work was compressed into three.

And did I mention there was a pandemic on? Everyone was working from home. In the middle of all this we also brought online a separate academic cluster for courses, tied to Canvas, and handled a major yearly power maintenance event at our Holyoke data center.

2020 has been a difficult year, but also a rewarding one. Cannon has processed millions of job-hours for our researchers, we've turned up some exciting services and technologies, and our team has somehow managed to remain close and productive despite the distances that separate us.

Here's to a happy and productive 2021.

| 2019 - Cluster refresh - Cannon goes live |

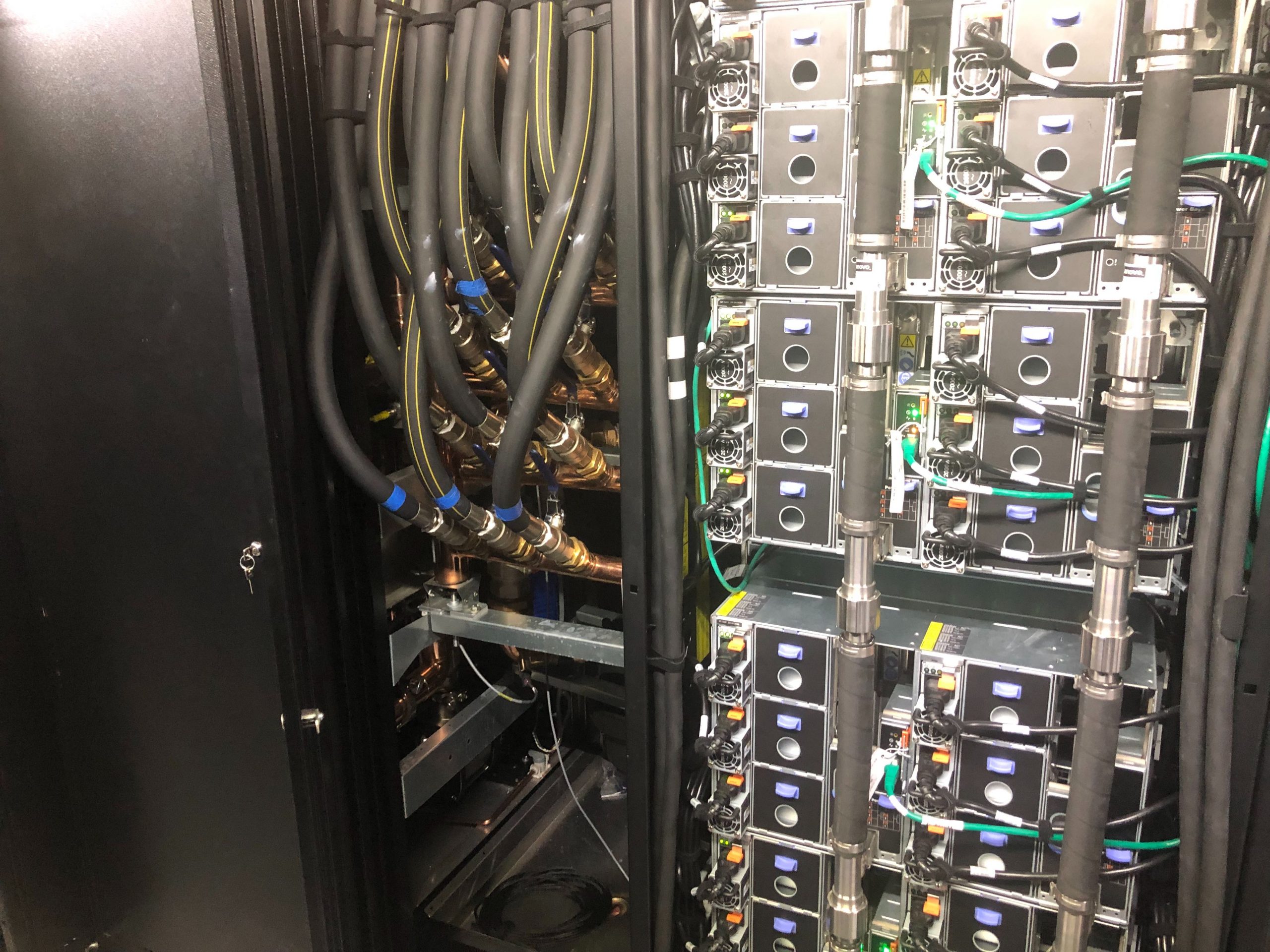

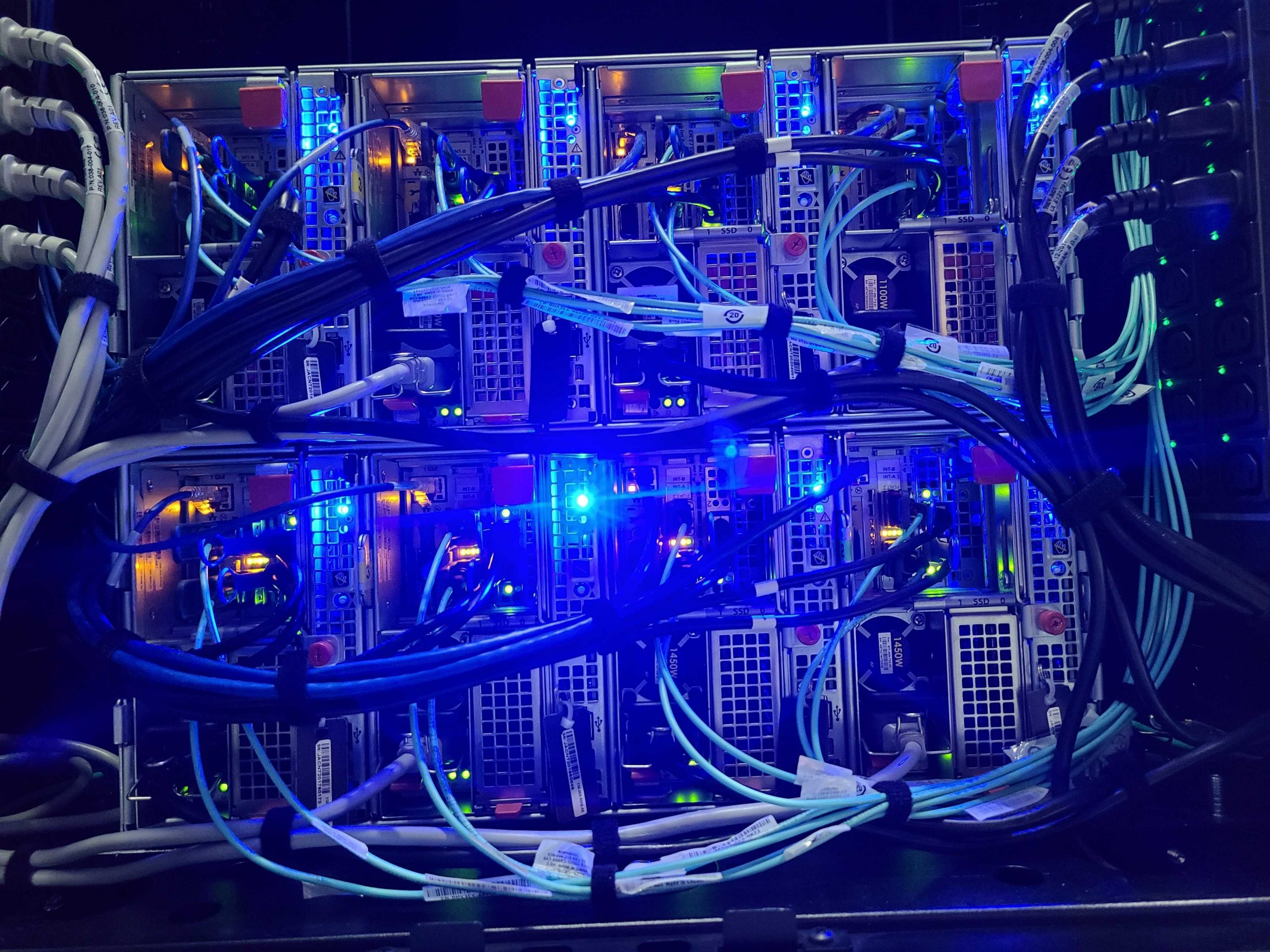

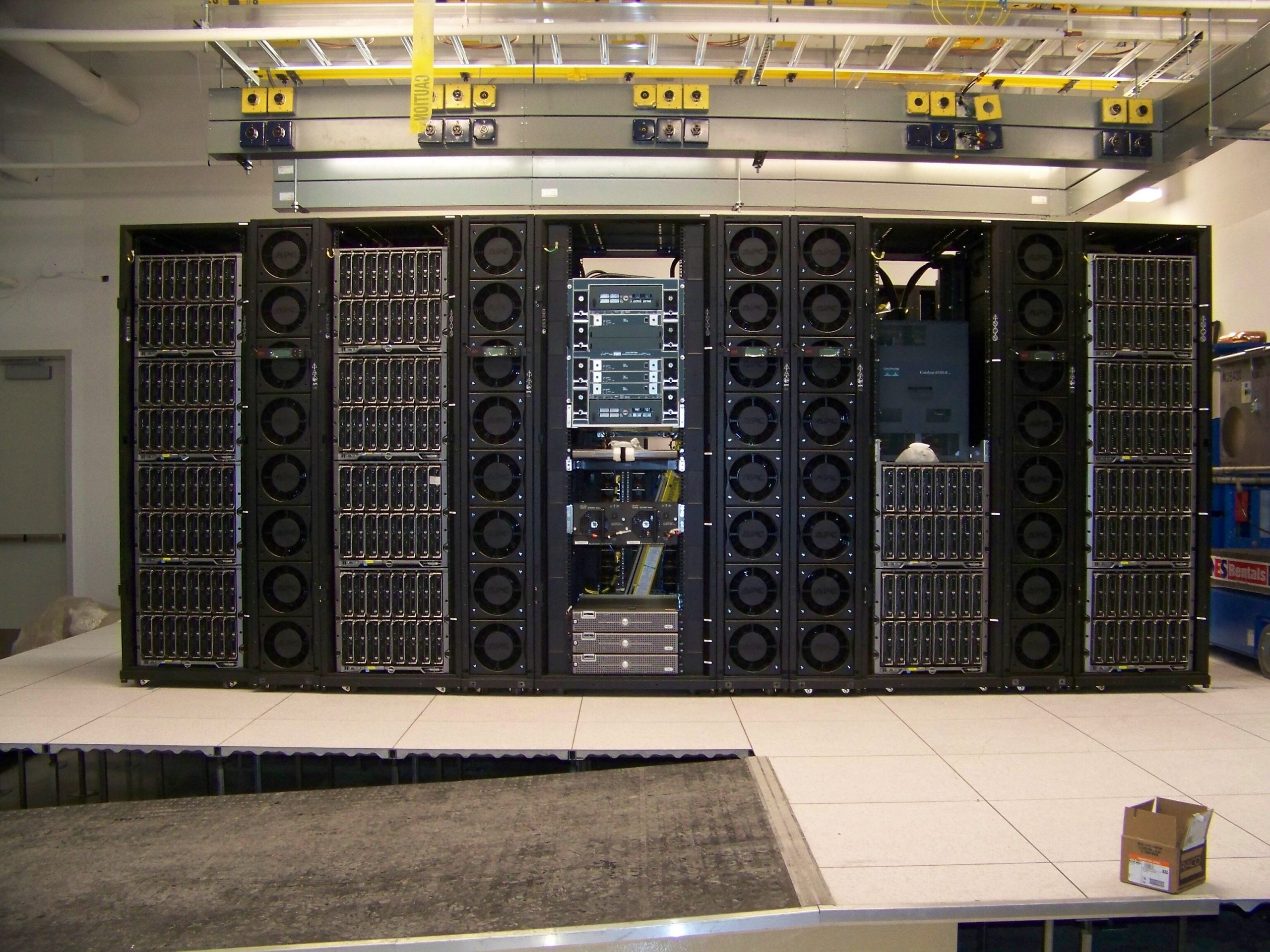

2019 was also a year of change where the cluster was concerned. Our cluster compute is leased, which allows us to refresh the entire cluster approximately every three years. So in 2019, Odyssey 2 was replaced by Cannon, a state-of-the-art water-cooled cluster of Lenovo compute and GPU servers.

Cannon wasn't just an incremental speed jump. Its 2.076 petaFLOPS of performance was enough to place us #7 among academic systems on the TOP500 supercomputer list and #144 on the list overall. Cannon also brought benefits to our LEED Platinum data center, because the less-efficient air cooling of the cluster was augmented by a more-efficient water-cooling system. And no, we've never had so much as a single leak, thanks to very clever engineering and near-foolproof interconnects.

All this speed didn't come without speedbumps. A scratch storage appliance that was meant to replace our slower previous filesystem failed to live up to its promises. Cannon and the users' jobs running on it could easily overwhelm the scratch filesystem and bring it to its knees. After much triage and back-and-forth with the vendor, our solution was one that has served us well with storage in the past: we built our own. I'm pleased to say that holyscratch01 has done an admirable job of keeping up with Cannon's raw power.

And speaking of storage, it kept growing. From 2018 to 2020 we expanded by many, many petabytes. In the last six years we went from 14 PB to well over 45 PB. And we'll add even more in 2020.

| 2017 - Odyssey 3 |

In 2017, Odyssey 3 was brought online as our second cluster refresh. This refresh added 15,000 Intel cores to the existing cluster and greatly increased our job capacity. As with most years, we also added significant amounts of storage. Our in-house built scratch storage was still performing admirably, but it began to show its age as the cluster's power increased.

This year also saw the closing of Harvard's on-campus data centers. While we had enjoyed the luxury of having three data centers across which we could have more redundancy, the closing of 60 Oxford meant retooling some of our primary systems (login, authentication, VPN, etc.) to rely more on our Boston and Holyoke DCs. Thankfully, HUIT was able to provide us a bit of space in the Science Center machine room, so we were actually able to maintain some of that triple redundancy for our most critical user-facing systems.

In 2018, Odyssey 3 was upgraded to CentOS 7 and gained support for containerization. Our virtual desktop system also got an overhaul as we moved from the end-of-life NX to Open OnDemand (aka VDI/OOD).

| 2013 - Odyssey expands at MGHPCC |

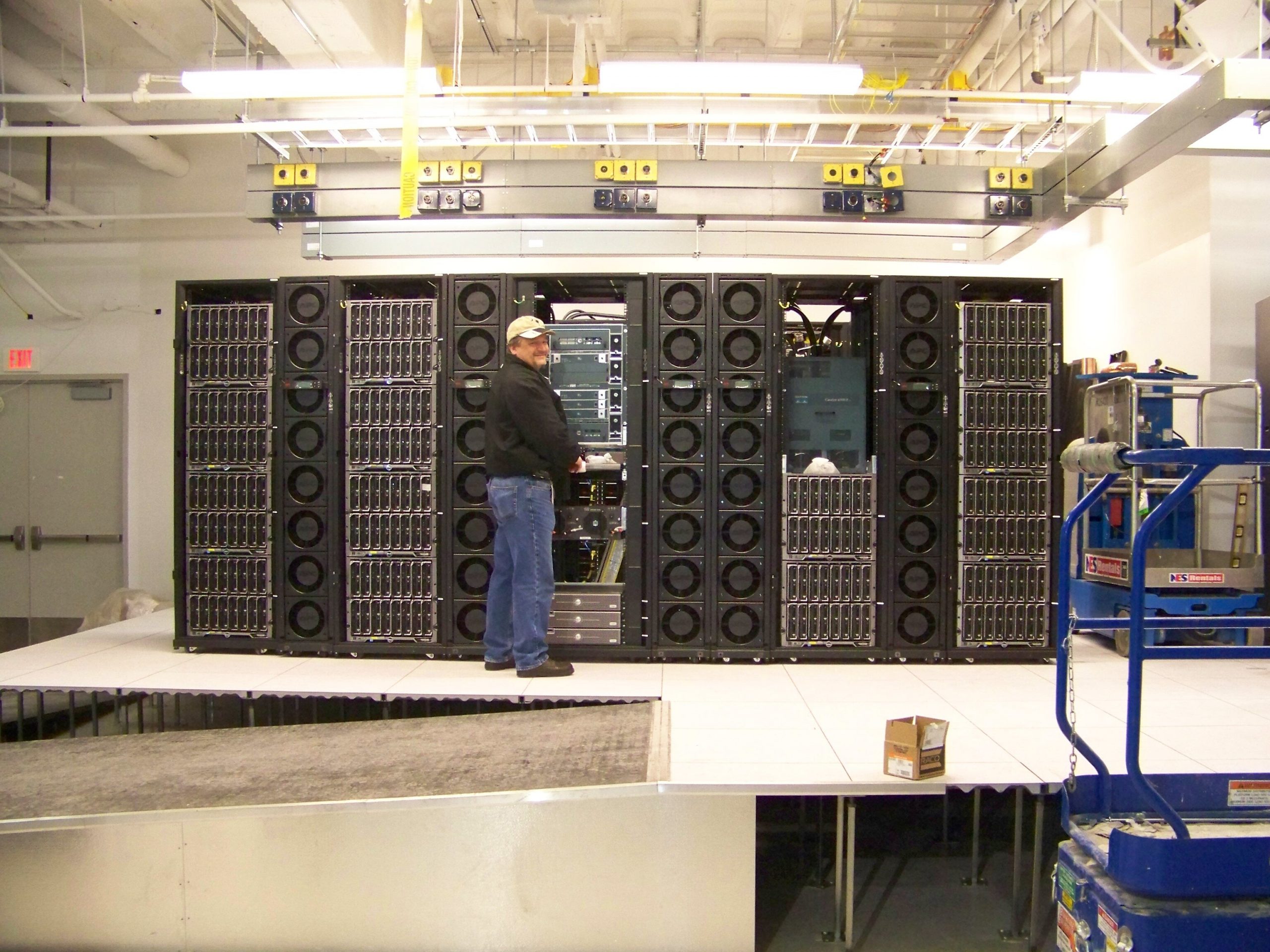

With MGHPCC completed and ready to power up, Odyssey 2 moved in. The new cluster came online in 2013 with 28,000 AMD Abu Dhabi cores, a major leap from Odyssey 1's 4,096. Two fibre links back to Boston and Cambridge provided redundant connectivity. We were really in the HPC game now.

| 2011 - Construction of MGHPCC begins |

In 2011, we entered into an agreement with other area organizations and universities to build a green data center in Holyoke, Massachusetts. This DC would be a 'hot' facility. Each area (or pod) would not be kept cold, as most people expect. Instead, it would run at a variable ambient temperature, with hot air extracted from each double row's 'hot aisle'. The facility would also make use of local water resources, green power, flywheel power management, and other techniques that would eventually earn it a LEED Platinum certification. Platinum is the highest rating a facility like MGHPCC can attain. That's a big deal for a facility that is essentially converting electricity into heat and data.

MGHPCC's construction and outfitting was completed in November of 2013.

| 2008 - Odyssey is installed in our Boston Data Center |

In 2008, Odyssey 1, consisting of 4,096 cores, was installed at our Boston data center. FASRC was becoming a 'thing' and jumping into the HPC pool with both feet.

| 2007 - The odyssey begins in the Life Sciences Division (LSDIV) |

What would eventually become FASRC was formed in the Life Sciences Division around 2007. Mike Ethier and Luis Silva, who were part of that small team, are still with us today. Servers were installed in the now-defunct Converse Hall server room to run everything from compute jobs to email.

Copyright © 2020. All Rights Reserved.
